| version | author | date | changelog |
|---|---|---|---|
| 0.1 | | 2015-11-23 | main part of the doc done |
| 0.2 | | 2015-11-28 | add "references" |
| 0.3 | | 2016-01-04 | add qemu-kvm params breakdown (TODO) |

Kernel-based Virtual Machine (KVM) is a virtualization infrastructure for the Linux kernel that turns it into a hypervisor. It has gained industry traction and market share across a wide variety of software deployments in just a few years. KVM has turned out to be a very interesting virtualization topic and is well positioned for the future.

Juniper MX86 (VMX: Virtual MX) is a virtual version of the MX Series 3D Universal Edge Router that is installed on an industry-standard x86 server running a Linux operating system, applicable third-party software, and the KVM hypervisor. It is one of the industry-level router implementations based on KVM technology.

About this doc:

This doc records some learning and testing notes about KVM/MX86 technology:

- illustration of various installation procedures
- illustration of the verification procedure
- illustration of features involved in the VMX installation process, mostly from the perspective of KVM/virtualization technology
- some case studies

This doc can be used as a reference when you:

- read the official VMX documents and want an "accompanying" doc
- need a quick list of commands to verify a feature or troubleshoot an issue
- run into issues and need a "working example" as a reference

- breakdown of qemu command parameters
- customization of the installation script to make it startup friendly
- libvirt API scripting
- DPDK programming
- internal crabs? (flow cache, junos troubleshooting, internal packet flow, etc)

Part 1: VMX installation

1. prepare for the installation

1.1. identify current system info

Before starting the installation, we need to collect some basic information about the server that VMX is about to be built on. At minimum, make sure the server complies with the "Minimum Hardware and Software Requirements".

- server manufacturer info
- operating system
- cpu and memory
- NIC/controller
- NIC driver
- VT-d/IOMMU
- kvm/qemu version
- libvirt version

1.1.1. server Manufacturer/model/SN

- this server is a ProLiant BL660c Gen8 server.
- chassis SN is USE4379WSS

The same info can be acquired from HP iLO, but running a command from an ssh session is more convenient.

    ping@trinity:~$ sudo dmidecode |sed -n '/System Info/,/^$/p'
    System Information
        Manufacturer: HP
        Product Name: ProLiant BL660c Gen8      #<------
        Version: Not Specified
        Serial Number: USE4379WSS               #<------
        UUID: 31393736-3831-5355-4534-333739575353
        Wake-up Type: Power Switch
        SKU Number: 679118-B21
        Family: ProLiant

dmidecode -t 1 will print the same info:

    ping@trinity:~$ sudo dmidecode -t 1
    Handle 0x0100, DMI type 1, 27 bytes
    System Information
        Manufacturer: HP
        Product Name: ProLiant BL660c Gen8
        Version: Not Specified
        Serial Number: USE4379WSS
        UUID: 31393736-3831-5355-4534-333739575353
        Wake-up Type: Power Switch
        SKU Number: 679118-B21
        Family: ProLiant

-t is handy, but sed is a more general way to extract a part of the long text output of any command.
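The sed range technique used above carries over to other commands. As a minimal sketch, assuming a helper named `extract_block` (a hypothetical name, not part of the original notes), the range expression can be wrapped in a function that prints every block starting at a line matching a pattern and ending at the next empty line. The sample text below is canned, not real dmidecode output.

```shell
#!/bin/sh
# extract_block PATTERN: read text on stdin and print each block that starts
# at a line matching PATTERN and ends at the next empty line (inclusive).
# PATTERN is a basic regular expression and must not contain "/".
# (Hypothetical helper name, for illustration only.)
extract_block() {
    sed -n "/$1/,/^\$/p"
}

# Canned sample standing in for a long command output.
printf 'BIOS Information\n\tVendor: HP\n\nSystem Information\n\tManufacturer: HP\n\nChassis Information\n' |
    extract_block 'System Info'
```

On a real server the same function could be fed from a pipe, for example `sudo dmidecode | extract_block 'System Info'`.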

1.1.2. Operating System

- linux distribution: ubuntu 14.04.2
- linux kernel in use: 3.19.0-25-generic

Both values are taken from the following output:

    ping@trinity:~$ lsb_release -a
    No LSB modules are available.
    Distributor ID: Ubuntu
    Description:    Ubuntu 14.04.2 LTS      #<------
    Release:        14.04
    Codename:       trusty

    ping@trinity:~$ uname -a
    Linux trinity 3.19.0-25-generic #26~14.04.1-Ubuntu SMP Fri Jul 24 21:16:20 UTC 2015 x86_64 x86_64 x86_64 GNU/Linux
                  ^^^^^^^^^^^^^^^^^

There are many alternative commands that print OS version/release info.

To list all kernels currently installed in the server:

    ping@trinity:~$ dpkg --get-selections | grep linux-image
    linux-image-3.13.0-32-generic                   install     #<------
    linux-image-3.16.0-30-generic                   install     #<------
    linux-image-3.19.0-25-generic                   install     #<------
    linux-image-extra-3.13.0-32-generic             install
    linux-image-extra-3.16.0-30-generic             install
    linux-image-extra-3.19.0-25-generic             install
    linux-image-generic-lts-utopic                  install

In this server multiple versions of the linux kernel have been installed, including our target kernel 3.13.0-32-generic. There is no need to install the kernel again - we only need to select the right one and reboot the system with it.

1.1.3. cpu and memory

This server's CPU and memory info:

- CPU model: Intel® Xeon® CPU E5-4627 v2 @ 3.30GHz (Ivy Bridge)
- number of "CPU processors": 4
- number of "physical cores" per CPU processor: 8
- VT-x (not VT-d) enabled
- this CPU does not support HT (HyperThreading), see this spec
- 32 DIMM slots, populated with 32 GB DDR3 modules (not every slot is filled); 500+ GB total memory available

The lscpu output confirms the CPU details:

    ping@trinity:~$ lscpu
    Architecture:          x86_64
    CPU op-mode(s):        32-bit, 64-bit
    Byte Order:            Little Endian
    CPU(s):                32              #<----(1)
    On-line CPU(s) list:   0-31
    Thread(s) per core:    1               #<----(2)
    Core(s) per socket:    8
    Socket(s):             4               #<----(4)
    NUMA node(s):          4
    Vendor ID:             GenuineIntel
    CPU family:            6
    Model:                 62
    Stepping:              4
    CPU MHz:               3299.797
    BogoMIPS:              6605.01
    Virtualization:        VT-x            #<----(3)
    L1d cache:             32K
    L1i cache:             32K
    L2 cache:              256K
    L3 cache:              16384K
    NUMA node0 CPU(s):     0-7
    NUMA node1 CPU(s):     8-15
    NUMA node2 CPU(s):     16-23
    NUMA node3 CPU(s):     24-31

| 1 | total number of CPU cores |

| 2 | hyperthreading is not enabled or not supported in this server |

| 3 | VT-x is enabled |

| 4 | max number of sockets available (to hold CPU) in the server |
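The relationship between the annotated lscpu fields is simple arithmetic: sockets x cores per socket x threads per core gives the logical CPU count, and a threads-per-core value above 1 means HyperThreading is active. The sketch below assumes a helper named `cpu_summary` (hypothetical, not from the original notes) that reads lscpu-style text on stdin; the sample input repeats the three values from the capture above.

```shell
#!/bin/sh
# cpu_summary: read lscpu-style text on stdin and print the logical CPU count
# implied by sockets x cores x threads, plus whether HyperThreading is active.
# (Hypothetical helper name, for illustration only.)
cpu_summary() {
    awk -F': *' '
        /^Socket\(s\)/          { sockets = $2 }
        /^Core\(s\) per socket/ { cores = $2 }
        /^Thread\(s\) per core/ { threads = $2 }
        END {
            printf "logical_cpus=%d hyperthreading=%s\n",
                   sockets * cores * threads, (threads > 1 ? "on" : "off")
        }'
}

# Values copied from the lscpu capture in this section.
printf 'Thread(s) per core: 1\nCore(s) per socket: 8\nSocket(s): 4\n' | cpu_summary
```

On a live system the function could be fed directly with `lscpu | cpu_summary`.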

    ping@trinity:~$ grep -m1 "model name" /proc/cpuinfo
    model name      : Intel(R) Xeon(R) CPU E5-4627 v2 @ 3.30GHz     #<------

    ping@trinity:~$ cat /proc/cpuinfo | sed -e '/^$/,$d'
    processor       : 0
    vendor_id       : GenuineIntel
    cpu family      : 6
    model           : 62
    model name      : Intel(R) Xeon(R) CPU E5-4627 v2 @ 3.30GHz     #<------
    stepping        : 4
    microcode       : 0x427
    cpu MHz         : 3299.797
    cache size      : 16384 KB
    physical id     : 0
    siblings        : 8
    core id         : 0
    cpu cores       : 8
    apicid          : 0
    initial apicid  : 0
    fpu             : yes
    fpu_exception   : yes
    cpuid level     : 13
    wp              : yes
    flags           : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr      (1)
                      pge mca cmov pat pse36 clflush dts acpi mmx fxsr
                      sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp       (2)
                      lm constant_tsc arch_perfmon pebs bts rep_good
                      nopl xtopology nonstop_tsc aperfmperf eagerfpu
                      pni pclmulqdq dtes64 monitor ds_cpl vmx smx est       (3)
                      tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2
                      x2apic popcnt tsc_deadline_timer aes xsave avx
                      f16c rdrand lahf_lm ida arat epb pln pts dtherm       (4)
                      tpr_shadow vnmi flexpriority ept vpid fsgsbase
                      smep erms xsaveopt
    bugs            :
    bogomips        : 6599.59
    clflush size    : 64
    cache_alignment : 64
    address sizes   : 46 bits physical, 48 bits virtual
    power management:

| 1 | pse flag indicates 2M hugepage support |

| 2 | pdpe1gb flag indicates 1G hugepage support |

| 3 | vmx flag indicates VT-x capability |

| 4 | rdrand flag, required by the current VMX implementation, supported in Ivy Bridge CPUs and not in Sandy Bridge CPUs |
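Checking the four flags called out above by eye is error-prone on a long flags line. A minimal sketch, assuming a helper named `has_cpu_flag` (hypothetical, not from the original notes), matches a flag as a whole word so that, for example, `vm` does not match `vmx`. The sample line is a shortened stand-in for the real flags line.

```shell
#!/bin/sh
# has_cpu_flag FLAG: succeed if FLAG appears as a whole word on the first
# "flags" line of /proc/cpuinfo-style text read from stdin.
# (Hypothetical helper name, for illustration only.)
has_cpu_flag() {
    grep -m1 '^flags' | tr -s ' \t:' '\n' | grep -qx "$1"
}

# Shortened sample flags line; on a real host use: has_cpu_flag vmx < /proc/cpuinfo
sample='flags : fpu vme pse pdpe1gb vmx rdrand'
for f in pse pdpe1gb vmx rdrand; do
    if printf '%s\n' "$sample" | has_cpu_flag "$f"; then
        echo "$f: present"
    else
        echo "$f: missing"
    fi
done
```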

ping@trinity: free -h

total used free shared buffers cached

Mem: 503G 13G 490G 1.5M 176M 6.9G

-/+ buffers/cache: 5.9G 497G

Swap: 14G 0B 14G

The dmidecode view of the CPU information is shown below:

ping@trinity: sudo dmidecode -t 4

# dmidecode 2.12

SMBIOS 2.8 present.

Handle 0x0400, DMI type 4, 42 bytes

Processor Information

Socket Designation: Proc 1

Type: Central Processor

Family: Xeon

Manufacturer: Intel

ID: E4 06 03 00 FF FB EB BF

Signature: Type 0, Family 6, Model 62, Stepping 4

Flags:

FPU (Floating-point unit on-chip)

VME (Virtual mode extension)

DE (Debugging extension)

PSE (Page size extension)

TSC (Time stamp counter)

MSR (Model specific registers)

PAE (Physical address extension)

MCE (Machine check exception)

CX8 (CMPXCHG8 instruction supported)

APIC (On-chip APIC hardware supported)

SEP (Fast system call)

MTRR (Memory type range registers)

PGE (Page global enable)

MCA (Machine check architecture)

CMOV (Conditional move instruction supported)

PAT (Page attribute table)

PSE-36 (36-bit page size extension)

CLFSH (CLFLUSH instruction supported)

DS (Debug store)

ACPI (ACPI supported)

MMX (MMX technology supported)

FXSR (FXSAVE and FXSTOR instructions supported)

SSE (Streaming SIMD extensions)

SSE2 (Streaming SIMD extensions 2)

SS (Self-snoop)

HTT (Multi-threading)

TM (Thermal monitor supported)

PBE (Pending break enabled)

Version: Intel(R) Xeon(R) CPU E5-4627 v2 @ 3.30GHz

Voltage: 1.4 V

External Clock: 100 MHz

Max Speed: 4800 MHz

Current Speed: 3300 MHz

Status: Populated, Enabled

Upgrade: Socket LGA2011

L1 Cache Handle: 0x0710

L2 Cache Handle: 0x0720

L3 Cache Handle: 0x0730

Serial Number: Not Specified

Asset Tag: Not Specified

Part Number: Not Specified

Core Count: 8

Core Enabled: 8

Thread Count: 8

Characteristics:

64-bit capable

Handle 0x0401, DMI type 4, 42 bytes

Processor Information

Socket Designation: Proc 2

Type: Central Processor

......

Handle 0x0402, DMI type 4, 42 bytes

Processor Information

Socket Designation: Proc 3

......

Handle 0x0403, DMI type 4, 42 bytes

Processor Information

Socket Designation: Proc 4

......

The dmidecode view of the memory data (dmidecode -t 17) is:

ping@trinity: sudo dmidecode -t 17

# dmidecode 2.12

SMBIOS 2.8 present.

Handle 0x1100, DMI type 17, 40 bytes

Memory Device

Array Handle: 0x1000

Error Information Handle: Not Provided

Total Width: 72 bits

Data Width: 64 bits

Size: 32 GB #<------

Form Factor: DIMM

Set: None

Locator: PROC 1 DIMM 1

Bank Locator: Not Specified

Type: DDR3

Type Detail: Synchronous

Speed: 1866 MHz

Manufacturer: HP

Serial Number: Not Specified

Asset Tag: Not Specified

Part Number: 712384-081

Rank: 4

Configured Clock Speed: 1866 MHz

Minimum voltage: 1.500 V

Maximum voltage: 1.500 V

Configured voltage: 1.500 V

Handle 0x1101, DMI type 17, 40 bytes

Memory Device

Array Handle: 0x1000

Error Information Handle: Not Provided

Total Width: 72 bits

Data Width: 64 bits

Size: No Module Installed

Form Factor: DIMM

Set: 1

Locator: PROC 1 DIMM 2

Bank Locator: Not Specified

Type: DDR3

Type Detail: Synchronous

Speed: Unknown

Manufacturer: UNKNOWN

Serial Number: Not Specified

Asset Tag: Not Specified

Part Number: NOT AVAILABLE

Rank: Unknown

Configured Clock Speed: Unknown

Minimum voltage: Unknown

Maximum voltage: Unknown

Configured voltage: Unknown

Handle 0x1102, DMI type 17, 40 bytes

Memory Device

......

Set: 2

Locator: PROC 1 DIMM 3

......

......

Handle 0x111F, DMI type 17, 40 bytes

Memory Device

Array Handle: 0x1003

Error Information Handle: Not Provided

Total Width: 72 bits

Data Width: 64 bits

Size: 32 GB

Form Factor: DIMM

Set: 31

Locator: PROC 4 DIMM 8

Bank Locator: Not Specified

Type: DDR3

Type Detail: Synchronous

Speed: 1866 MHz

Manufacturer: HP

Serial Number: Not Specified

Asset Tag: Not Specified

Part Number: 712384-081

Rank: 4

Configured Clock Speed: 1866 MHz

Minimum voltage: 1.500 V

Maximum voltage: 1.500 V

Configured voltage: 1.500 V

1.1.4. network adapter/controller

- two types of HP NIC adapters are equipped: 560FLB and 560M
  - the ixgbe kernel driver module that came with the linux kernel is in use
  - SR-IOV is supported
  - by default no VF has been configured yet
- adapter/interface/PCI address mapping table of all physical network interfaces in this server:

Table 1. server interface table

| No. | PCI address | adapter | interface name |
|---|---|---|---|
| 1 | 02:00.0 | 560FLB | em9 |
| 2 | 02:00.1 | 560FLB | em10 |
| 3 | 06:00.0 | 560M | p2p1 |
| 4 | 06:00.1 | 560M | p2p2 |
| 5 | 21:00.0 | 560FLB | em1 |
| 6 | 21:00.1 | 560FLB | em2 |
| 7 | 23:00.0 | 560M | p3p1 |
| 8 | 23:00.1 | 560M | p3p2 |

[lspci]

lspci is a linux utility for displaying information about PCI buses in the

system and devices connected to them.

    ping@trinity:~$ lspci | grep -i ethernet
    02:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    02:00.1 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    06:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    06:00.1 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    21:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    21:00.1 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    23:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    23:00.1 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)

The prefix number string of each line is a "PCI address":

    02:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)
    ^  ^  ^
    |  |  |
    |  |  function (port): 0-7
    |  slot (NIC): 0-1f
    bus: 0-ff

There is also a "domain" before "bus:slot.function". It usually has the value 0000 and is ignored. See the -s and -D options in man lspci.
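To make the address layout concrete, here is a minimal sketch that splits a PCI address into its four parts using only shell parameter expansion. The function name `split_pci_addr` is a hypothetical one chosen for this example; the default domain of 0000 follows the note above.

```shell
#!/bin/sh
# split_pci_addr ADDR: print domain, bus, slot and function of a PCI address
# given as [domain:]bus:slot.function. A missing domain defaults to 0000.
# (Hypothetical helper name, for illustration only.)
split_pci_addr() {
    addr=$1
    case $addr in
        *:*:*) ;;                       # domain already present
        *)     addr="0000:$addr" ;;     # short form: prepend default domain
    esac
    func=${addr##*.}        # text after the last "."
    rest=${addr%.*}         # domain:bus:slot
    slot=${rest##*:}
    rest=${rest%:*}         # domain:bus
    bus=${rest##*:}
    domain=${rest%:*}
    echo "domain=$domain bus=$bus slot=$slot function=$func"
}

split_pci_addr 02:00.0
split_pci_addr 0000:23:00.1
```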

ping@trinity:~$ sudo lspci -vs 02:00.0

02:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01) (2)

Subsystem: Hewlett-Packard Company Ethernet 10Gb 2-port 560FLB Adapter (1)

Flags: bus master, fast devsel, latency 0, IRQ 64

Memory at ef700000 (32-bit, non-prefetchable) [size=1M]

I/O ports at 4000 [size=32]

Memory at ef6f0000 (32-bit, non-prefetchable) [size=16K]

[virtual] Expansion ROM at ef400000 [disabled] [size=512K]

Capabilities: [40] Power Management version 3

Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+

Capabilities: [70] MSI-X: Enable+ Count=64 Masked-

Capabilities: [a0] Express Endpoint, MSI 00

Capabilities: [e0] Vital Product Data

Capabilities: [100] Advanced Error Reporting

Capabilities: [140] Device Serial Number 00-00-00-ff-ff-00-00-00

Capabilities: [150] Alternative Routing-ID Interpretation (ARI)

Capabilities: [160] Single Root I/O Virtualization (SR-IOV) (4)

Kernel driver in use: ixgbe (3)

ping@trinity:~$ sudo lspci -vs 06:00.0

06:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01) (2)

Subsystem: Hewlett-Packard Company Ethernet 10Gb 2-port 560M Adapter (1)

Physical Slot: 2

Flags: bus master, fast devsel, latency 0, IRQ 136

Memory at eff00000 (32-bit, non-prefetchable) [size=1M]

I/O ports at 6000 [size=32]

Memory at efef0000 (32-bit, non-prefetchable) [size=16K]

[virtual] Expansion ROM at efc00000 [disabled] [size=512K]

Capabilities: [40] Power Management version 3

Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+

Capabilities: [70] MSI-X: Enable+ Count=64 Masked-

Capabilities: [a0] Express Endpoint, MSI 00

Capabilities: [e0] Vital Product Data

Capabilities: [100] Advanced Error Reporting

Capabilities: [140] Device Serial Number 00-00-00-ff-ff-00-00-00

Capabilities: [150] Alternative Routing-ID Interpretation (ARI)

Capabilities: [160] Single Root I/O Virtualization (SR-IOV) (4)

Kernel driver in use: ixgbe (3)

ping@trinity:~$ sudo lspci -vs 21:* | grep -iE "controller|adapter"

21:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01) (2)

Subsystem: Hewlett-Packard Company Ethernet 10Gb 2-port 560FLB Adapter (1)

21:00.1 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)

Subsystem: Hewlett-Packard Company Ethernet 10Gb 2-port 560FLB Adapter

ping@trinity:~$ sudo lspci -vs 23:* | grep -iE "controller|adapter"

23:00.0 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)

Subsystem: Hewlett-Packard Company Ethernet 10Gb 2-port 560M Adapter

23:00.1 Ethernet controller: Intel Corporation 82599 10 Gigabit Dual Port Backplane Connection (rev 01)

Subsystem: Hewlett-Packard Company Ethernet 10Gb 2-port 560M Adapter

| 1 | adapter vendor info |

| 2 | controller vendor info |

| 3 | current driver in use is ixgbe |

| 4 | the adapter advertises the SR-IOV capability |

1.1.5. ixgbe kernel driver

- the current ixgbe driver of this server is the one that came with linux kernel 3.19.0-25-generic:
  - ixgbe version 4.0.1-k
  - it supports the following capabilities:
    - max_vfs parameter: max 63 VF allowed per port (default 0: VF won't be enabled by default)
    - allow_unsupported_sfp parameter: unsupported/untested SFP allowed
    - debug option
- the README.txt file from the VMX installation package contains more info about the ixgbe driver

The driver version can be read from sysfs or from modinfo:

    ping@trinity:~$ cat /sys/module/ixgbe/version
    4.0.1-k

    ping@trinity:~$ modinfo ixgbe
    filename:       /lib/modules/3.19.0-25-generic/kernel/drivers/net/ethernet/intel/ixgbe/ixgbe.ko
    version:        4.0.1-k         #<------
    license:        GPL
    description:    Intel(R) 10 Gigabit PCI Express Network Driver
    author:         Intel Corporation, <linux.nics@intel.com>
    srcversion:     44CBFE422F8BAD726E61653
    alias:          pci:v00008086d000015ABsv*sd*bc*sc*i*
    alias:          pci:v00008086d000015AAsv*sd*bc*sc*i*
    alias:          pci:v00008086d00001563sv*sd*bc*sc*i*
    alias:          pci:v00008086d00001560sv*sd*bc*sc*i*
    alias:          pci:v00008086d0000154Asv*sd*bc*sc*i*
    alias:          pci:v00008086d00001557sv*sd*bc*sc*i*
    alias:          pci:v00008086d00001558sv*sd*bc*sc*i*
    alias:          pci:v00008086d0000154Fsv*sd*bc*sc*i*
    alias:          pci:v00008086d0000154Dsv*sd*bc*sc*i*
    alias:          pci:v00008086d00001528sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010F8sv*sd*bc*sc*i*
    alias:          pci:v00008086d0000151Csv*sd*bc*sc*i*
    alias:          pci:v00008086d00001529sv*sd*bc*sc*i*
    alias:          pci:v00008086d0000152Asv*sd*bc*sc*i*
    alias:          pci:v00008086d000010F9sv*sd*bc*sc*i*
    alias:          pci:v00008086d00001514sv*sd*bc*sc*i*
    alias:          pci:v00008086d00001507sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010FBsv*sd*bc*sc*i*
    alias:          pci:v00008086d00001517sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010FCsv*sd*bc*sc*i*
    alias:          pci:v00008086d000010F7sv*sd*bc*sc*i*
    alias:          pci:v00008086d00001508sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010DBsv*sd*bc*sc*i*
    alias:          pci:v00008086d000010F4sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010E1sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010F1sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010ECsv*sd*bc*sc*i*
    alias:          pci:v00008086d000010DDsv*sd*bc*sc*i*
    alias:          pci:v00008086d0000150Bsv*sd*bc*sc*i*
    alias:          pci:v00008086d000010C8sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010C7sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010C6sv*sd*bc*sc*i*
    alias:          pci:v00008086d000010B6sv*sd*bc*sc*i*
    depends:        mdio,ptp,dca,vxlan
    intree:         Y
    vermagic:       3.19.0-25-generic SMP mod_unload modversions
    signer:         Magrathea: Glacier signing key
    sig_key:        6A:AA:11:D1:8C:2D:3A:40:B1:B4:DB:E5:BF:8A:D6:56:DD:F5:18:38
    sig_hashalgo:   sha512
    parm:           max_vfs:Maximum number of virtual functions to allocate per
                    physical function - default is zero and maximum value is 63. (Deprecated)
                    (uint)
    parm:           allow_unsupported_sfp:Allow unsupported and untested SFP+
                    modules on 82599-based adapters (uint)
    parm:           debug:Debug level (0=none,...,16=all) (int)

1.1.6. VT-d/IOMMU

The Intel VT-d feature is supported in this server's current OS kernel.

    ping@trinity:~$ less /boot/config-3.19.0-25-generic | grep -i iommu
    CONFIG_GART_IOMMU=y
    CONFIG_CALGARY_IOMMU=y
    CONFIG_CALGARY_IOMMU_ENABLED_BY_DEFAULT=y
    CONFIG_IOMMU_HELPER=y
    CONFIG_VFIO_IOMMU_TYPE1=m
    CONFIG_IOMMU_API=y
    CONFIG_IOMMU_SUPPORT=y              #<------
    CONFIG_AMD_IOMMU=y
    CONFIG_AMD_IOMMU_STATS=y
    CONFIG_AMD_IOMMU_V2=m
    CONFIG_INTEL_IOMMU=y                #<------
    # CONFIG_INTEL_IOMMU_DEFAULT_ON is not set
    CONFIG_INTEL_IOMMU_FLOPPY_WA=y
    # CONFIG_IOMMU_DEBUG is not set
    # CONFIG_IOMMU_STRESS is not set

    ping@trinity:~$ grep -i remap /boot/config-3.13.0-32-generic
    CONFIG_HAVE_IOREMAP_PROT=y
    CONFIG_IRQ_REMAP=y                  #<------

    ping@ubuntu1:~$ grep -i pci_stub /boot/config-3.13.0-32-generic
    CONFIG_PCI_STUB=m                   #<------

A more "general" way to view the config of the running kernel is:

    less /boot/config-`uname -r`
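The config check can be scripted so that each option is reported as built in (y), module (m), or unset. The sketch below assumes a helper named `kconfig_state` (hypothetical, not from the original notes) and uses a small temporary file with three lines copied from the captures above in place of a real /boot/config-* file. Note that CONFIG_INTEL_IOMMU_DEFAULT_ON being unset is the reason the intel_iommu=on boot parameter is needed later.

```shell
#!/bin/sh
# kconfig_state FILE OPTION: print "y", "m" or "unset" for a kernel config
# option, reading a /boot/config-* style file.
# (Hypothetical helper name, for illustration only.)
kconfig_state() {
    value=$(sed -n "s/^$2=\([ym]\)\$/\1/p" "$1" | head -n 1)
    echo "${value:-unset}"
}

# Temporary sample file; on a real host pass "/boot/config-$(uname -r)" instead.
cfg=$(mktemp)
printf 'CONFIG_INTEL_IOMMU=y\n# CONFIG_INTEL_IOMMU_DEFAULT_ON is not set\nCONFIG_PCI_STUB=m\n' > "$cfg"
for opt in CONFIG_INTEL_IOMMU CONFIG_INTEL_IOMMU_DEFAULT_ON CONFIG_PCI_STUB; do
    echo "$opt: $(kconfig_state "$cfg" "$opt")"
done
rm -f "$cfg"
```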

1.1.7. kvm/qemu software version

- qemu version 2.0.0 (Debian 2.0.0+dfsg-2ubuntu1.19)
- kvm version (same as linux kernel version)

This looks OK compared with the "Minimum Hardware and Software Requirements" from the release note:

Virtualization: QEMU-KVM 2.0.0+dfsg-2ubuntu1.11 or later

To verify the qemu/kvm version and hardware acceleration support:

- qemu version:

      ping@trinity:~$ qemu-system-x86_64 --version
      QEMU emulator version 2.0.0 (Debian 2.0.0+dfsg-2ubuntu1.19), Copyright (c) 2003-2008 Fabrice Bellard

  or

      ping@trinity:~$ kvm --version
      QEMU emulator version 2.0.0 (Debian 2.0.0+dfsg-2ubuntu1.19), Copyright (c) 2003-2008 Fabrice Bellard

- KVM kernel module info:

      ping@trinity:~$ modinfo kvm
      filename:       /lib/modules/3.19.0-25-generic/kernel/arch/x86/kvm/kvm.ko
      license:        GPL
      author:         Qumranet
      srcversion:     F58A0F8858A02EFA0549DE5
      depends:
      intree:         Y
      vermagic:       3.19.0-25-generic SMP mod_unload modversions
      signer:         Magrathea: Glacier signing key
      sig_key:        6A:AA:11:D1:8C:2D:3A:40:B1:B4:DB:E5:BF:8A:D6:56:DD:F5:18:38
      sig_hashalgo:   sha512
      parm:           allow_unsafe_assigned_interrupts:Enable device assignment on platforms without interrupt remapping support. (bool)
      parm:           ignore_msrs:bool
      parm:           min_timer_period_us:uint
      parm:           tsc_tolerance_ppm:uint

- HW acceleration OK:

      ping@trinity:~$ kvm-ok
      INFO: /dev/kvm exists
      KVM acceleration can be used

      ping@MX86-host-BL660C-B1: ls -l /dev/kvm
      crw-rw---- 1 root kvm 10, 232 Nov 5 11:35 /dev/kvm

1.1.8. libvirt

- current libvirt version is 1.2.2
- this needs to be upgraded to the required libvirt version.

The installed version can be checked with:

    ping@ubuntu:~$ libvirtd --version
    libvirtd (libvirt) 1.2.2

libvirt 1.2.2 lacks some bug fixes and features that are important for VMX. One of these is numatune, which is used together with vCPU "pinning" to keep a guest VM's vCPUs and memory within one NUMA node, hence reducing the NUMA misses that are one of the main contributors to performance degradation.
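A version check like this one is easy to get wrong with plain string comparison (for example, 1.2.10 sorts before 1.2.8 as text). A minimal sketch, assuming a helper named `version_ge` (hypothetical, not from the original notes) and GNU sort's `-V` option, compares dotted versions correctly. The target version 1.2.8 used in the example is the one this document installs in section 1.2.5.

```shell
#!/bin/sh
# version_ge HAVE WANT: succeed if version HAVE is greater than or equal to
# WANT. Relies on the -V (version sort) option of GNU sort.
# (Hypothetical helper name, for illustration only.)
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

have=1.2.2      # value reported by "libvirtd --version" in the capture above
want=1.2.8      # version installed later in this document
if version_ge "$have" "$want"; then
    echo "libvirt $have is new enough"
else
    echo "libvirt $have is older than $want and needs an upgrade"
fi
```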

Now that the server HW/SW configuration has been identified, the checklist looks like this:

- server manufacturer info
- operating system
- cpu and memory
- NIC/controller
- NIC driver
- VT-d/IOMMU
- kvm/qemu version
- libvirt version

Checked items are those that do not meet the requirements and need some change.

1.2. adjust the system

After collecting the server's current configuration, we need to change some settings according to the "Preparing the System to Install vMX" portion of the VMX document.

1.2.1. BIOS setting

The following (Intel) virtualization features are required to set up VMX and need to be enabled from within the BIOS, if they are not enabled yet:

- VT-x
- VT-d
- SR-IOV
- HyperThreading

| the requirement may change in different VMX releases |

enter BIOS setting:

Even on the same hardware, different BIOS versions might look a little bit different. In this system we have BIOS version "I32". Here are more details of the current BIOS info in this server:

labroot@MX86-host-BL660C-B1:~/vmx_20141216$ sudo dmidecode

# dmidecode 2.12

SMBIOS 2.8 present.

227 structures occupying 7860 bytes.

Table at 0xBFBDB000.

Handle 0x0000, DMI type 0, 24 bytes

BIOS Information

Vendor: HP

Version: I32 #<------

Release Date: 02/10/2014

Address: 0xF0000

Runtime Size: 64 kB

ROM Size: 8192 kB

Characteristics:

PCI is supported

PNP is supported

BIOS is upgradeable

BIOS shadowing is allowed

ESCD support is available

Boot from CD is supported

Selectable boot is supported

EDD is supported

5.25"/360 kB floppy services are supported (int 13h)

5.25"/1.2 MB floppy services are supported (int 13h)

3.5"/720 kB floppy services are supported (int 13h)

Print screen service is supported (int 5h)

8042 keyboard services are supported (int 9h)

Serial services are supported (int 14h)

Printer services are supported (int 17h)

CGA/mono video services are supported (int 10h)

ACPI is supported

USB legacy is supported

BIOS boot specification is supported

Function key-initiated network boot is supported

Targeted content distribution is supported

Firmware Revision: 1.51


-

VT-x/VT-d/HyperThreading

The new VMX releases requires HyperThreading to be enabled to support flow cache feature. The installation script will abort if HT is not enabled, see troubleshooting installation script for more detail of this issue. To install VMX, Either modifying the script to disable HT1 calculation/verification, or installing VMX manually.

According to "Minimum Hardware and Software Requirements":

-

For lab simulation and low performance (less than 100 Mbps) use cases, any x86 processor (Intel or AMD) with VT-d capability.

-

For all other use cases, Intel Ivy Bridge processors or later are required. Example of Ivy Bridge processor: Intel Xeon E5-2667 v2 @ 3.30 GHz 25 MB Cache

-

For single root I/O virtualization (SR-IOV) NIC type, use Intel 82599-based PCI-Express cards (10 Gbps) and Ivy Bridge processors.

These statements indicate some current implementation info:

-

lab simulation/low performance ⇒ virtio

-

all other use cases ⇒ high performance ⇒ SRIOV

-

"Ivy Bridge CPU" is required for VMX running SR-IOV

-

-

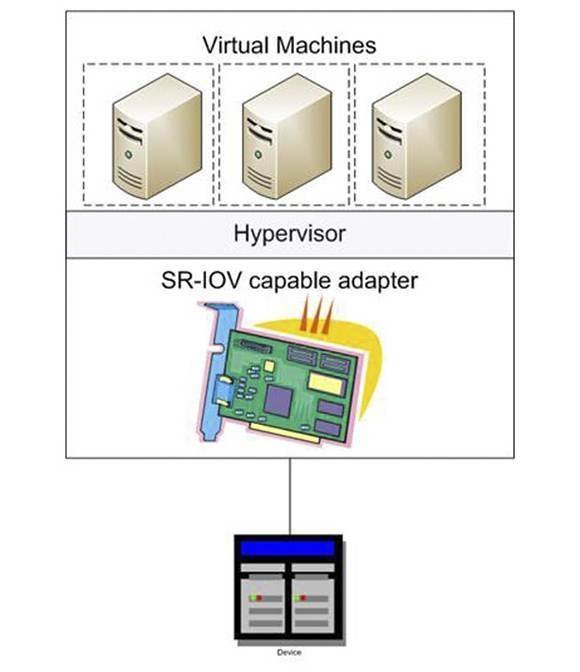

SR-IOV

1.2.2. enable iommu/VT-d

To enable VT-d we need to change the kernel boot parameters. Here are the steps:

- Make sure the /etc/default/grub file contains this line:

      GRUB_CMDLINE_LINUX_DEFAULT="intel_iommu=on"

- If not, add it:

      echo 'GRUB_CMDLINE_LINUX_DEFAULT="intel_iommu=on"' >> /etc/default/grub

  So it looks like:

      ping@trinity:~$ grep -i iommu /etc/default/grub
      GRUB_CMDLINE_LINUX_DEFAULT="intel_iommu=on"

- Run sudo update-grub to update grub
- reboot the system to make it start with the configured kernel parameters

      sudo reboot

When using 'echo', be careful to use >> instead of >. > will "overwrite" the whole file with whatever is echoed, instead of "appending" to it. In case that happens, the file has to be corrected before running update-grub.

Instead of checking the grub config file, looking at the parameters passed to the kernel at the time it was started might be more accurate:

    ping@matrix:~$ cat /proc/cmdline
    BOOT_IMAGE=/boot/vmlinuz-3.19.0-25-generic.efi.signed root=UUID=875bb72c-c5de-4329-af48-55af85f26398 ro intel_iommu=on pci=realloc

In this example, we are sure the current kernel was started with IOMMU enabled.
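The check against the kernel command line can be scripted. The sketch below assumes a helper named `cmdline_has` (hypothetical, not from the original notes) that looks for a parameter as a whole space-separated word, so that `iommu=on` is not mistaken for `intel_iommu=on`. The sample string is the /proc/cmdline capture shown above.

```shell
#!/bin/sh
# cmdline_has PARAM [CMDLINE]: succeed if PARAM appears as a whole
# space-separated word in CMDLINE (default: the contents of /proc/cmdline).
# (Hypothetical helper name, for illustration only.)
cmdline_has() {
    line=${2-$(cat /proc/cmdline)}
    case " $line " in
        *" $1 "*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Command line copied from the capture in this section.
sample='BOOT_IMAGE=/boot/vmlinuz-3.19.0-25-generic.efi.signed root=UUID=875bb72c-c5de-4329-af48-55af85f26398 ro intel_iommu=on pci=realloc'
if cmdline_has intel_iommu=on "$sample"; then
    echo "intel_iommu=on was passed to the kernel"
else
    echo "intel_iommu=on is missing from the kernel command line"
fi
```

Calling `cmdline_has intel_iommu=on` without the second argument checks the running kernel.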

On a system where IOMMU was not enabled before, after enabling it and restarting you will notice kernel logs similar to the capture below:

ping@Compute24:~$ dmesg -T | grep -iE "iommu|dmar"

[Thu Dec 17 09:07:22 2015] Command line: BOOT_IMAGE=/vmlinuz-3.16.0-30-generic

root=/dev/mapper/Compute24--vg-root ro intel_iommu=on

crashkernel=384M-2G:64M,2G-16G:128M,16G-:256M

[Thu Dec 17 09:07:22 2015] ACPI: DMAR 0x00000000BDDAB840 000718 (v01 HP

ProLiant 00000001 \xffffffd2? 0000162E)

[Thu Dec 17 09:07:22 2015] Kernel command line:

BOOT_IMAGE=/vmlinuz-3.16.0-30-generic root=/dev/mapper/Compute24--vg-root ro

intel_iommu=on crashkernel=384M-2G:64M,2G-16G:128M,16G-:256M

[Thu Dec 17 09:07:22 2015] Intel-IOMMU: enabled

[Thu Dec 17 09:07:22 2015] dmar: Host address width 46

[Thu Dec 17 09:07:22 2015] dmar: DRHD base: 0x000000f34fe000 flags: 0x0

[Thu Dec 17 09:07:22 2015] dmar: IOMMU 0: reg_base_addr f34fe000 ver 1:0 cap d2078c106f0466 ecap f020de

[Thu Dec 17 09:07:22 2015] dmar: DRHD base: 0x000000f7efe000 flags: 0x0

[Thu Dec 17 09:07:22 2015] dmar: IOMMU 1: reg_base_addr f7efe000 ver 1:0 cap d2078c106f0466 ecap f020de

[Thu Dec 17 09:07:22 2015] dmar: DRHD base: 0x000000fbefe000 flags: 0x0

[Thu Dec 17 09:07:22 2015] dmar: IOMMU 2: reg_base_addr fbefe000 ver 1:0 cap d2078c106f0466 ecap f020de

[Thu Dec 17 09:07:22 2015] dmar: DRHD base: 0x000000ecffe000 flags: 0x1

[Thu Dec 17 09:07:22 2015] dmar: IOMMU 3: reg_base_addr ecffe000 ver 1:0 cap d2078c106f0466 ecap f020de

[Thu Dec 17 09:07:22 2015] dmar: RMRR base: 0x000000bdffd000 end: 0x000000bdffffff

......

[Thu Dec 17 09:07:22 2015] dmar: RMRR base: 0x000000bddde000 end: 0x000000bdddefff

[Thu Dec 17 09:07:22 2015] dmar: ATSR flags: 0x0

[Thu Dec 17 09:07:22 2015] IOAPIC id 12 under DRHD base 0xfbefe000 IOMMU 2

[Thu Dec 17 09:07:22 2015] IOAPIC id 11 under DRHD base 0xf7efe000 IOMMU 1

[Thu Dec 17 09:07:22 2015] IOAPIC id 10 under DRHD base 0xf34fe000 IOMMU 0

[Thu Dec 17 09:07:22 2015] IOAPIC id 8 under DRHD base 0xecffe000 IOMMU 3

[Thu Dec 17 09:07:22 2015] IOAPIC id 0 under DRHD base 0xecffe000 IOMMU 3

[Thu Dec 17 09:07:24 2015] IOMMU 2 0xfbefe000: using Queued invalidation

[Thu Dec 17 09:07:24 2015] IOMMU 1 0xf7efe000: using Queued invalidation

[Thu Dec 17 09:07:24 2015] IOMMU 0 0xf34fe000: using Queued invalidation

[Thu Dec 17 09:07:24 2015] IOMMU 3 0xecffe000: using Queued invalidation

[Thu Dec 17 09:07:24 2015] IOMMU: Setting RMRR:

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:01:00.0 [0xbddde000 - 0xbdddefff]

......

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:01:00.2 [0xbddde000 - 0xbdddefff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:01:00.4 [0xbddde000 - 0xbdddefff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:03:00.0 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:03:00.1 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:02:00.0 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:02:00.1 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:06:00.0 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:06:00.1 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:01:00.0 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:01:00.2 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:21:00.0 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:21:00.1 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:23:00.0 [0xe8000 - 0xe8fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:23:00.1 [0xe8000 - 0xe8fff]

......

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:23:00.0 [0xbdf83000 - 0xbdf84fff]

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:23:00.1 [0xbdf83000 - 0xbdf84fff]

......

[Thu Dec 17 09:07:24 2015] IOMMU: Prepare 0-16MiB unity mapping for LPC

[Thu Dec 17 09:07:24 2015] IOMMU: Setting identity map for device 0000:00:1f.0 [0x0 - 0xffffff]

1.2.3. install required linux kernel for SR-IOV based VMX

The linux kernel needs to be changed if VMX is to be set up with SR-IOV.

Currently the ixgbe driver that comes with ubuntu does not work with VMX. The main issue is the lack of multicast support on ingress: multicast packets received on a VF are discarded silently and are not delivered into the guest VM. An immediate effect is that OSPF (and most of today's IGP) neighborships won't come up. Therefore building VMX based on SR-IOV requires re-compiling the ixgbe kernel driver from source code, which Juniper provides in the installation package with the multicast support fixed. At the time of writing there is a problem compiling ixgbe from source code under any kernel other than 3.13.0-32-generic. That's why the kernel needs to be changed in this setup.

There is a statement about this issue in the VMX document:

Modified IXGBE drivers are included in the package. Multicast promiscuous mode for Virtual Functions is needed to receive control traffic that comes with broadcast MAC addresses. The reference driver does not come with this mode set, so the IXGBE drivers in this package contain certain modifications to overcome this limitation.

| there is a plan to make ixgbe kernel module to work with newer kernel in new VMX release. |

use below commands (provided in VMX installation doc) to change kernel:

sudo apt-get install linux-firmware linux-image-3.13.0.32-generic \

linux-image-extra-3.13.0.32-generic \

linux-headers-3.13.0.32-generic
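Since the modified ixgbe source only compiles under 3.13.0-32-generic, it is worth confirming which kernel is actually running after the reboot. A minimal Python sketch follows; the helper name `kernel_matches` is made up for illustration, and `platform.release()` returns the same string that `uname -r` prints.

```python
import platform

# Kernel release named in the text above as the only one the driver builds on.
REQUIRED_KERNEL = "3.13.0-32-generic"


def kernel_matches(running, required=REQUIRED_KERNEL):
    """Return True when the running kernel release equals the required one."""
    return running.strip() == required


running = platform.release()  # same value as `uname -r`
if kernel_matches(running):
    print("kernel OK:", running)
else:
    print("kernel is %s, expected %s" % (running, REQUIRED_KERNEL))
```

If the check reports a different release, the server probably booted the old default kernel, which is what the GRUB step described next addresses.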

After changing the kernel for the next reboot, you may want to make it the default kernel for later reboots. To achieve that, follow the steps below:

in file /boot/grub/grub.cfg locate this line:

menuentry 'Ubuntu, with Linux 3.13.0-32-generic'

then move it before the first menuentry entry:

export linux_gfx_mode
#<------move to here
menuentry 'Ubuntu' --class ubuntu - ....

save and reboot the server.

| This is optional: otherwise you will still be given a chance to select the kernel version during system reboot. |
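To double-check the result of the manual edit, the order of the `menuentry` lines can be read back from the file. This is only a sketch: the function names are hypothetical, and it assumes the titles are single-quoted as in the line shown above.

```python
import re

# Matches lines such as:  menuentry 'Ubuntu, with Linux 3.13.0-32-generic' ...
MENUENTRY_RE = re.compile(r"^[ \t]*menuentry\s+'([^']+)'", re.MULTILINE)


def menuentry_titles(grub_cfg_text):
    """Return the menuentry titles in the order they appear in grub.cfg."""
    return MENUENTRY_RE.findall(grub_cfg_text)


def first_entry_is(grub_cfg_text, wanted_title):
    """True when the first menuentry in the file carries the wanted title."""
    titles = menuentry_titles(grub_cfg_text)
    return bool(titles) and titles[0] == wanted_title


# Usage (path taken from the text above):
# with open("/boot/grub/grub.cfg") as fh:
#     print(first_entry_is(fh.read(), "Ubuntu, with Linux 3.13.0-32-generic"))
```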

1.2.4. install required software packages

Use the commands below (provided in the VMX installation doc) to install the required software packages:

sudo apt-get update
sudo apt-get install bridge-utils qemu-kvm libvirt-bin python numactl \
    python-netifaces vnc4server libyaml-dev python-yaml \
    libparted0-dev libpciaccess-dev libnuma-dev libyajl-dev \
    libxml2-dev libglib2.0-dev libnl-dev libnl-dev python-pip \
    python-dev libxml2-dev libxslt-dev

| A quick way to verify that all of the required software packages in the list were installed correctly is to simply re-run the installation commands above. If everything was installed correctly, apt-get reports that each package is already the newest version and installs nothing. |
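As an alternative to re-running apt-get, the package state can be read from `dpkg-query`. The sketch below is an assumption-laden helper rather than part of the VMX tooling: the function names are hypothetical, and it expects the output format produced by `dpkg-query -W -f='${Package} ${Status}\n'`, where an installed package shows the status `install ok installed`.

```python
import subprocess

# A few package names copied from the install command above.
REQUIRED = ["bridge-utils", "qemu-kvm", "libvirt-bin", "numactl", "python-yaml"]


def missing_packages(required, dpkg_output):
    """Return the required packages that dpkg does not report as installed."""
    installed = set()
    for line in dpkg_output.splitlines():
        parts = line.split()
        if len(parts) == 4 and parts[1:] == ["install", "ok", "installed"]:
            installed.add(parts[0])
    return [pkg for pkg in required if pkg not in installed]


def query_dpkg(required):
    """Run dpkg-query for the given packages and return its stdout."""
    cmd = ["dpkg-query", "-W", "-f=${Package} ${Status}\n"] + list(required)
    # Unknown packages only produce a message on stderr, so stdout is still usable.
    return subprocess.run(cmd, capture_output=True, text=True).stdout


# Usage on the server:
# print(missing_packages(REQUIRED, query_dpkg(REQUIRED)))
```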

1.2.5. libvirt

Make sure libvirt version 1.2.8 is installed for the "performance version" of VMX. Refer to the VMX document for detailed steps to install it from source code.

The command captures below demonstrate the libvirt upgrade process.

-

original libvirt coming with Ubuntu 14.04:

ping@ubuntu:~$ libvirtd --version
libvirtd (libvirt) 1.2.2

-

download and prepare source code:

cd /tmp
wget http://libvirt.org/sources/libvirt-1.2.8.tar.gz
tar zxvf libvirt-1.2.8.tar.gz

-

stop and uninstall old version:

cd libvirt-1.2.8
sudo ./configure --prefix=/usr/local --with-numactl

 checking for a BSD-compatible install... /usr/bin/install -c
 checking whether build environment is sane... yes
 checking for a thread-safe mkdir -p... /bin/mkdir -p
 checking for gawk... gawk
 checking whether make sets $(MAKE)... yes
 ......

sudo service libvirt-bin stop
 libvirt-bin stop/waiting

sudo make uninstall
 Making uninstall in .
 make[1]: Entering directory `/tmp/libvirt-1.2.8'
 make[1]: Leaving directory `/tmp/libvirt-1.2.8'
 ......

/bin/rm rf /usr/local/lib/libvirt*
 /bin/rm: cannot remove ‘/usr/local/lib/libvirt*’: No such file or directory

-

install new version:

sudo ./configure --prefix=/usr --localstatedir=/ --with-numactl
 checking for a BSD-compatible install... /usr/bin/install -c
 checking whether build environment is sane... yes
 checking for a thread-safe mkdir -p... /bin/mkdir -p
......

sudo make
 make all-recursive
 make[1]: Entering directory `/tmp/libvirt-1.2.8'
 Making all in .
 make[2]: Entering directory `/tmp/libvirt-1.2.8'
 make[2]: Leaving directory `/tmp/libvirt-1.2.8'
 Making all in gnulib/lib
 make[2]: Entering directory `/tmp/libvirt-1.2.8/gnulib/lib'
 GEN alloca.h
 GEN c++defs.h
 GEN warn-on-use.h
 GEN arg-nonnull.h
 GEN arpa/inet.h
 ......

sudo make install
 Making install in .
 make[1]: Entering directory `/tmp/libvirt-1.2.8'
 make[2]: Entering directory `/tmp/libvirt-1.2.8'
 ......

-

start new version:

ping@ubuntu:/tmp/libvirt-1.2.8$ sudo service libvirt-bin start
libvirt-bin start/running, process 24450
ping@ubuntu:/tmp/libvirt-1.2.8$ ps aux| grep libvirt

ping@ubuntu:/tmp/libvirt-1.2.8$ ps aux| grep libvirt
root 24450 0.5 0.0 405252 10772 ? Sl 21:40 0:00 /usr/sbin/libvirtd -d

-

verify the new version:

ping@trinity:~$ libvirtd --version
libvirtd (libvirt) 1.2.8

ping@trinity:~$ service libvirt-bin status
libvirt-bin start/running, process 1559

ping@trinity:~$ which libvirtd
/usr/sbin/libvirtd

ping@trinity:~$ /usr/sbin/libvirtd --version
/usr/sbin/libvirtd (libvirt) 1.2.8

ping@trinity:~$ virsh --version
1.2.8

ping@trinity:/images/vmx_20151102.0$ sudo virsh -c qemu:///system version
Compiled against library: libvirt 1.2.8
Using library: libvirt 1.2.8
Using API: QEMU 1.2.8
Running hypervisor: QEMU 2.0.0
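The version check above can also be scripted, for example before kicking off an unattended install. The following is a sketch under two assumptions: the helper names are made up, and 1.2.8 is treated as a minimum version, whereas the text only says that 1.2.8 should be installed.

```python
import re

# Version named in the text for the "performance version" of VMX.
REQUIRED_LIBVIRT = (1, 2, 8)


def parse_version(text):
    """Extract the first x.y.z version from output like 'libvirtd (libvirt) 1.2.8'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("no version found in %r" % text)
    return tuple(int(part) for part in match.groups())


def meets_minimum(text, minimum=REQUIRED_LIBVIRT):
    """True when the reported version is at least the required one."""
    return parse_version(text) >= minimum


print(meets_minimum("libvirtd (libvirt) 1.2.2"))  # stock version shown earlier
print(meets_minimum("libvirtd (libvirt) 1.2.8"))  # version after the upgrade
```

Comparing tuples of integers avoids the pitfall of comparing version strings, where "1.2.10" would sort before "1.2.8".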

1.3. download vmx installation package

download VMX tarball from internal or public server.

-

internal server:

pings@svl-jtac-tool02:/volume/publish/dev/wrlinux/mx86/15.1F_att_drop$ ls -l
total 4180932
-rw-rw-r-- 1 rbu-builder rbu-builder 816737275 Nov 9 12:23 vmx_20151102.0.tgz #<------
-rw-rw-r-- 1 rbu-builder rbu-builder 3447736320 Nov 4 12:18 vmx_20151102.0_tarball_issue.tgz

To have a quick look at the guest image files included in the tarball:

pings@svl-jtac-tool02:~$ cd /volume/publish/dev/wrlinux/mx86/15.1F_att_drop/
pings@svl-jtac-tool02:~$ tar tf vmx_20151102.0.tgz | grep images
vmx_20151102.0/images/
vmx_20151102.0/images/jinstall64-vmx-15.1F-20151104.0-domestic.img (1)
vmx_20151102.0/images/vFPC-20151102.img (2)
vmx_20151102.0/images/vmxhdd.img (3)
vmx_20151102.0/images/metadata_usb.img

| 1 | jinstall image, vRE/VCP (Virtual Control Plane) VM image |

| 2 | vFPC image, vFPC/VFP (Virtual Forwarding Plane) VM image |

| 3 | hdd image, vRE virtual harddisk image |

when downloaded from Juniper internal server, the jinstall image (vRE

guest VM) may or may not be included in the VMX tarball. it is available in the

normal Junos releases archives. tarball downloaded from public URL will always

include jinstall and all other necessary images.

|

If the tarball does not contain a vRE jinstall image, we can download the image from another location and copy it into the same "images" folder after untarring the VMX tarball.

pings@svl-jtac-tool02:/volume/build/junos/15.1F/daily/20151102.0/ship$ ls -l | grep install64 | grep vmx
1 builder 748510583 jinstall64-vmx-15.1F-20151102.0-domestic-signed.tgz
1 builder 33 jinstall64-vmx-15.1F-20151102.0-domestic-signed.tgz.md5
1 builder 41 jinstall64-vmx-15.1F-20151102.0-domestic-signed.tgz.sha1
1 builder 1005715456 jinstall64-vmx-15.1F-20151102.0-domestic.img #<----
1 builder 33 jinstall64-vmx-15.1F-20151102.0-domestic.img.md5
1 builder 41 jinstall64-vmx-15.1F-20151102.0-domestic.img.sha1
1 builder 748226059 jinstall64-vmx-15.1F-20151102.0-domestic.tgz
1 builder 33 jinstall64-vmx-15.1F-20151102.0-domestic.tgz.md5
1 builder 41 jinstall64-vmx-15.1F-20151102.0-domestic.tgz.sha1
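The listing shows `.md5` and `.sha1` files next to each image, so a copied image can be checked before it is used. A minimal sketch, assuming (this is not confirmed by the source) that each `.md5` file starts with the 32-character hex digest; the function names are hypothetical.

```python
import hashlib


def md5_of_file(path, chunk_size=1024 * 1024):
    """Compute the MD5 hex digest of a file without loading it all into memory."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_md5_file(image_path, md5_path):
    """Compare an image against the digest stored in its companion .md5 file."""
    with open(md5_path) as fh:
        # Assumption: the first whitespace-separated token is the hex digest.
        expected = fh.read().split()[0].lower()
    return md5_of_file(image_path) == expected


# Usage, with the file names from the listing above:
# matches_md5_file("jinstall64-vmx-15.1F-20151102.0-domestic.img",
#                  "jinstall64-vmx-15.1F-20151102.0-domestic.img.md5")
```

Reading in chunks matters here because the image is about 1 GB.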

There is no guarantee that an arbitrary combination of jinstall and vFPC images will work together. The publicly released VMX packages should already include a tested and working combination. Read the official instructions and documentation that come with the software release before starting to install the VMX.

|

1.4. prepare a "work folder" for the installation

On my server I organize folders and files in this structure:

/virtualization                              (1)
├── images                                   (2)
│   ├── ubuntu.img                           (3)
│   ├── vmx_20151102.0                       (3)
│   │   ├── build
│   │   │   └── vmx1
│   │   │       ├── images
│   │   │       ├── logs
│   │   │       │   └── vmx_1448293071.log
│   │   │       └── xml
│   │   ├── config
│   │   │   ├── samples
│   │   │   │   ├── vmx.conf.sriov
│   │   │   │   ├── vmx.conf.virtio
│   │   │   │   └── vmx-galaxy.conf
│   │   │   ├── vmx.conf                     (6)
│   │   │   ├── vmx.conf.sriov1              (6)
│   │   │   ├── vmx.conf.sriov2              (6)
│   │   │   ├── vmx.conf.ori
│   │   │   └── vmx-junosdev.conf
│   │   ├── docs

...<snipped>...

│   └── vmx_20151102.0.tgz                   (3)
├── vmx1                                     (4)
│   ├── br-ext-generated.xml     \
│   ├── br-int-generated.xml     |
│   ├── cpu_affinitize.sh        | (5)
│   ├── vfconfig-generated.sh    |
│   ├── vPFE-generated.xml       |
│   ├── vRE-generated.xml        /
│   └── vmxhdd.img                 (7)
└── vmx2                                     (4)
    ├── br-ext-generated.xml     \
    ├── br-int-generated.xml     |
    ├── cpu_affinitize.sh        | (5)
    ├── vfconfig-generated.sh    |
    ├── vPFE-generated.xml       |
    ├── vRE-generated.xml        /
    └── vmxhdd.img                 (7)

27 directories, 136 files

| 1 | parent folder to hold all virtualization files/releases/images |

| 2 | "images" sub-folder holds all images for virtualizations |

| 3 | VM "images", installation files, tarballs, etc |

| 4 | sub-folder to hold all files for installation of multiple VMX VM instances |

| 5 | files generated by installation scripts |

| 6 | VMX installation configuration file |

| 7 | "hard disk" image file, holding all current VMX guest VM configs |

2. install VMX using installation script (SR-IOV)

Included in the tarball is an orchestration script that automates the VMX setup on the server. Running this script without any parameter prints a quick usage help.

ping@trinity:/virtualization/images/vmx_20151102.0$ sudo ./vmx.sh

Usage: vmx.sh [CONTROL OPTIONS]
       vmx.sh [LOGGING OPTIONS] [CONTROL OPTIONS]
       vmx.sh [JUNOS-DEV BIND OPTIONS]
       vmx.sh [CONSOLE LOGIN OPTIONS]

  CONTROL OPTIONS:
    --install                     : Install And Start vMX
    --start                       : Start vMX
    --stop                        : Stop vMX
    --restart                     : Restart vMX
    --status                      : Check Status Of vMX
    --cleanup                     : Stop vMX And Cleanup Build Files
    --cfg <file>                  : Override With The Specified vmx.conf File
    --env <file>                  : Override With The Specified Environment .env File
    --build <directory>           : Override With The Specified Directory for Temporary Files
    --help                        : This Menu

  LOGGING OPTIONS:
    -l                            : Enable Logging
    -lv                           : Enable Verbose Logging
    -lvf                          : Enable Foreground Verbose Logging

  JUNOS-DEV BIND OPTIONS:
    --bind-dev                    : Bind Junos Devices
    --unbind-dev                  : Unbind Junos Devices
    --bind-check                  : Check Junos Device Bindings
    --cfg <file>                  : Override With The Specified vmx-junosdev.conf File

  CONSOLE LOGIN OPTIONS:
    --console [vcp|vfp] [vmx_id]  : Login to the Console of VCP/VFP

  VFP Image OPTIONS:
    --vfp-info <VFP Image Path>   : Display Information About The Specified vFP image

Copyright(c) Juniper Networks, 2015

2.1. the vmx.conf file

The config file vmx.conf is a centralized place where all of the installation parameters and options are defined. The installation script vmx.sh scans this file as input and generates XML files and shell scripts as output. The generated XML files will be read by the libvirt/virsh tool later as input information to set up and manipulate the VMs.

The generated shell script will be executed to configure:

-

vcpu pinning (both SRIOV and VIRTIO)

-

VF properties (SRIOV only)

For better readability vmx.conf is implemented in YAML format. However, in

VMX the guest VM instances are manipulated via libvirt, which currently solely

relies on XML. This is why there are 2 software modules in the pre-installed

packages to support YAML to XML conversion:

-

libyaml-dev Fast YAML 1.1 parser and emitter library (development)

-

python-yaml YAML parser and emitter for Python
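To give a feel for the YAML-to-XML step, here is a small sketch that turns a dict, shaped like what a YAML parser would return for the CONTROL_PLANE section of vmx.conf, into a libvirt-style domain XML fragment. It is an illustration only: the function `domain_xml` is hypothetical, the XML that vmx.sh really generates contains far more than these three elements, and the YAML parsing itself is left out so that the example needs only the standard library.

```python
import xml.etree.ElementTree as ET


def domain_xml(name, vcpus, memory_mb):
    """Build a minimal libvirt-style <domain> element and return it as text."""
    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", unit="MiB").text = str(memory_mb)
    ET.SubElement(domain, "vcpu").text = str(vcpus)
    return ET.tostring(domain, encoding="unicode")


# Hypothetical parsed result for the CONTROL_PLANE section of the template.
control_plane = {"vcpus": 1, "memory-mb": 1024}

print(domain_xml("vcp-vmx1", control_plane["vcpus"], control_plane["memory-mb"]))
```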

|

Important tips about YAML:

In short, every space/indentation matters. Take care when modifying the files.

|

The vmx.conf template that comes with the VMX tarball looks like this:

ping@trinity:/virtualization/images/vmx_20151102.0/config$ cat vmx.conf
##############################################################
#
# vmx.conf
# Config file for vmx on the hypervisor.
# Uses YAML syntax.
# Leave a space after ":" to specify the parameter value.
#
##############################################################

---
#Configuration on the host side - management interface, VM images etc.
HOST:                                                                          (1)
    identifier                : vmx1   # Maximum 4 characters                  (2)
    host-management-interface : eth0                                           (3)
    routing-engine-image      : "/home/vmx/vmxlite/images/jinstall64-vmx.img"  (4)
    routing-engine-hdd        : "/home/vmx/vmxlite/images/vmxhdd.img"          (4)
    forwarding-engine-image   : "/home/vmx/vmxlite/images/vPFE.img"            (4)

---
#External bridge configuration                                                 (5)
BRIDGES:
    - type  : external
      name  : br-ext                  # Max 10 characters

---
#vRE VM parameters                                                             (6)
CONTROL_PLANE:
    vcpus       : 1
    memory-mb   : 1024
    console_port: 8601

    interfaces  :
      - type      : static
        ipaddr    : 10.102.144.94
        macaddr   : "0A:00:DD:C0:DE:0E"

---
#vPFE VM parameters                                                            (7)
FORWARDING_PLANE:
    memory-mb   : 6144
    vcpus       : 3
    console_port: 8602
    device-type : virtio                                                       (8)

    interfaces  :
      - type      : static
        ipaddr    : 10.102.144.98
        macaddr   : "0A:00:DD:C0:DE:10"

---
#Interfaces                                                                    (9)
JUNOS_DEVICES:
   - interface            : ge-0/0/0
     mac-address          : "02:06:0A:0E:FF:F0"
     description          : "ge-0/0/0 interface"

   - interface            : ge-0/0/1
     mac-address          : "02:06:0A:0E:FF:F1"
     description          : "ge-0/0/0 interface"

   - interface            : ge-0/0/2
     mac-address          : "02:06:0A:0E:FF:F2"
     description          : "ge-0/0/0 interface"

   - interface            : ge-0/0/3
     mac-address          : "02:06:0A:0E:FF:F3"
     description          : "ge-0/0/0 interface"

| 1 | "HOST" config section. |

| 2 | ID of the VMX instance. This string is encoded into the final VM names: vcp-<ID> and vfp-<ID>. |

| 3 | Current management interface of the server. The installation script will "move" the IP/MAC properties from this port to an external bridge named "br-ext". |

| 4 | Location of the vRE, vFPC and hard disk VM images. |

| 5 | External bridge configuration section: a bridge named br-ext will be created for management connections from/to the external networks. |

| 6 | vRE configuration section: this template uses 1 vCPU, 1G memory and console port 8601 to start the vRE guest VM. The VM mgmt interface's "peer interface" [1] on the host, vcp_ext-vmx1 ("attached" to the fxp0 port inside the guest VM), will be configured with the specified MAC address. The corresponding fxp0 interface inside the vRE VM will inherit the same MAC from it. [2] |

| 7 | vFPC configuration section: this template uses 3 vCPUs, 6G memory and console port 8602 to build the vFPC guest VM. The VM mgmt interface's "peer interface" [1] on the host, vfp_ext-vmx1 ("attached" to the ext port inside the guest VM), will be configured with the specified MAC address. The ext interface inside the vFPC guest VM will inherit the same MAC from it. |

| 8 | As a KVM implementation, VMX currently supports two types of network IO virtualization: VT-d + SRIOV, or VIRTIO. The config knob device-type determines which IO virtualization technology will be used to build VMX. This template uses "virtio" IO virtualization. |

| 9 | VMX router interface configuration section: this is where the properties of the router's ge-0/0/z interfaces can be configured. The configurable properties differ depending on the device-type value. Since this template uses "virtio" virtualization, only "mac-address" is configurable. More details will be covered in later sections of this doc. |
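The naming rules in the callouts above (identifier of at most 4 characters, bridge name of at most 10 characters, VM names vcp-<ID> and vfp-<ID>, host-side peer interfaces vcp_ext-<ID> and vfp_ext-<ID>) can be sketched as a small helper. The function names are hypothetical and only restate what the template comments and callouts say; vmx.sh may well apply further checks.

```python
def check_identifier(identifier):
    """Enforce the 'Maximum 4 characters' comment from the HOST section."""
    if not 1 <= len(identifier) <= 4:
        raise ValueError("identifier must be 1-4 characters: %r" % identifier)
    return identifier


def check_bridge_name(name):
    """Enforce the 'Max 10 characters' comment from the BRIDGES section."""
    if not 1 <= len(name) <= 10:
        raise ValueError("bridge name must be 1-10 characters: %r" % name)
    return name


def derived_names(identifier):
    """Return the VM and host-side interface names built from the identifier."""
    check_identifier(identifier)
    return {
        "vcp_vm": "vcp-" + identifier,
        "vfp_vm": "vfp-" + identifier,
        "vcp_ext_if": "vcp_ext-" + identifier,
        "vfp_ext_if": "vfp_ext-" + identifier,
    }


print(derived_names("vmx1"))
```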

2.2. assigning MAC address

The "virtual" MX uses virtual NICs running inside the guest VM. Unlike a real NIC, which comes with a built-in, globally unique MAC address, the MAC address of a "vNIC" is whatever you assign before or after the VMX instances are brought up.

To avoid conflicts with other external devices, at least the MAC addresses below need to be unique in the diagram:

-

MAC for ge-0/0/z

-

fxp0 on VRE(VCP)

-

eth1 or ext on VPFE(VFP)

|

VFP VM interface name changed in new VMX release.

|

These MAC addresses will exit the server and be learnt by the external device.

The interfaces below are internal/isolated and never communicate with external devices, so their MAC addresses do not matter:

-

em1 on VRE

-

eth0 or int on VPFE

A "Locally Administered MAC Address" is a good candidate for use in a lab test environment:

x2-xx-xx-xx-xx-xx
x6-xx-xx-xx-xx-xx
xA-xx-xx-xx-xx-xx
xE-xx-xx-xx-xx-xx
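The four patterns above all have the second-least-significant bit of the first octet set (locally administered) and the least-significant bit clear (unicast). A short sketch to test a given address; the function name is made up for illustration.

```python
def is_locally_administered_unicast(mac):
    """True when the first octet has the 'local' bit set and the 'multicast' bit clear."""
    first_octet = int(mac.replace("-", ":").split(":")[0], 16)
    local_bit = first_octet & 0b10      # set for locally administered addresses
    multicast_bit = first_octet & 0b01  # set for group (multicast) addresses
    return local_bit != 0 and multicast_bit == 0


for mac in ("02:04:17:01:02:02", "0A:00:DD:C0:DE:0E", "38:ea:a7:37:7c:54"):
    print(mac, is_locally_administered_unicast(mac))
```

The first two addresses are the kind used in the vmx.conf examples in this doc; the third is a burned-in address of a physical NIC and is reported as not locally administered.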

In my setup I follow the simple rule below to avoid MAC address conflicts with other systems in the lab network:

mac-address : "02:04:17:01:02:02"
               -- ----- -- -- --
               |    |   |  |  |
               |    |   |  |  (5)
               |    |   |  (4)
               |    |   (3)
               |    (2)
               (1)

| 1 | locally administered MAC address |

| 2 | last 4 digits of IP (e.g. x.y.4.17) or MAC of management interface. |

| 3 | VMX instance number, first VMX instance uses 01, second uses 02, etc. |

| 4 | 01 for control plane interface (fxp0 for VCP, ext for VFP) of a VM,

02 for forwarding plane interface (ge-0/0/z) of a VM |

| 5 | assign a unique number for each type of interface |

| VMX instance | interface | MAC address |
|---|---|---|
| VMX instance1 | vRE fxp0 | 02:04:17:01:01:01 |
| | vFPC ext | 02:04:17:01:01:02 |
| | ge-0/0/0 | 02:04:17:01:02:01 |
| | ge-0/0/1 | 02:04:17:01:02:02 |
| VMX instance2 | vRE fxp0 | 02:04:17:02:01:01 |
| | vFPC ext | 02:04:17:02:01:02 |
| | ge-0/0/0 | 02:04:17:02:02:01 |
| | ge-0/0/1 | 02:04:17:02:02:02 |
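The allocation rule is mechanical enough to script. The sketch below rebuilds the addresses in the table from the server's management IP (10.85.4.17 in this lab). The function name and its argument layout are my own, and the range check is an assumption: the rule writes each field as two decimal digits, so every value has to stay below 100.

```python
def build_mac(mgmt_ip, instance, plane, index):
    """Build a lab MAC as 02:<IP octet 3>:<IP octet 4>:<instance>:<plane>:<index>.

    plane: 1 = control plane interface (fxp0/ext), 2 = forwarding plane (ge-0/0/z)
    """
    octets = [int(part) for part in mgmt_ip.split(".")]
    fields = [octets[2], octets[3], instance, plane, index]
    for value in fields:
        if not 0 <= value <= 99:
            raise ValueError("each field must fit in two decimal digits: %r" % value)
    return "02:" + ":".join("%02d" % value for value in fields)


print(build_mac("10.85.4.17", 1, 1, 1))  # vRE fxp0 of instance 1
print(build_mac("10.85.4.17", 2, 2, 2))  # ge-0/0/1 of instance 2
```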

2.3. modify the vmx.conf (SR-IOV)

The config file needs to be changed according to the installation plan. This is the vmx.conf file that I use to set up SR-IOV based VMX.

ping@trinity:/virtualization/images/vmx_20151102.0/config$ cat vmx.conf
##############################################################
#
# vmx.conf
# Config file for vmx on the hypervisor.
# Uses YAML syntax.
# Leave a space after ":" to specify the parameter value.
#
##############################################################

---
#Configuration on the host side - management interface, VM images etc.
HOST:
    identifier                : vmx1   # Maximum 4 characters
    host-management-interface : em1
    routing-engine-image      : "/virtualization/images/vmx_20151102.0/images/jinstall64-vmx-15.1F-20151104.0-domestic.img"
    routing-engine-hdd        : "/virtualization/images/vmx_20151102.0/vmx1/vmxhdd.img"
    forwarding-engine-image   : "/virtualization/images/vmx_20151102.0/images/vFPC-20151102.img"

---
#External bridge configuration
BRIDGES:
    - type  : external
      name  : br-ext                  # Max 10 characters

---
#vRE VM parameters
CONTROL_PLANE:
    vcpus       : 1
    memory-mb   : 2048
    console_port: 8816

    interfaces  :
      - type      : static
        ipaddr    : 10.85.4.105
        macaddr   : "02:04:17:01:01:01"
---
#vPFE VM parameters
FORWARDING_PLANE:
    memory-mb   : 16384
    vcpus       : 4                                   (1)
    console_port: 8817
    device-type : sriov

    interfaces  :
      - type      : static
        ipaddr    : 10.85.4.106
        macaddr   : "02:04:17:01:01:02"

---
#Interfaces
JUNOS_DEVICES:
   - interface            : ge-0/0/0
     port-speed-mbps      : 10000                     (2)
     nic                  : p3p1                      (2)
     mtu                  : 2000  # DO NOT EDIT       (2)
     virtual-function     : 0                         (2)
     mac-address          : "02:04:17:01:02:01"
     description          : "ge-0/0/0 connects to eth6"

   - interface            : ge-0/0/1
     port-speed-mbps      : 10000                     (2)
     nic                  : p2p1                      (2)
     mtu                  : 2000  # DO NOT EDIT
     virtual-function     : 0                         (2)
     mac-address          : "02:04:17:01:02:02"
     description          : "ge-0/0/1 connects to eth7"

| 1 | assign 4 CPUs to vPFE VM |

| 2 | SR-IOV only options |

-

my server's mgmt interface name is em1

-

the vRE and vFPC image locations are simply the images folder from the untarred installation package. These images can then be shared by all VMX instances.

-

the virtual harddisk image vmxhdd.img will be in a separate folder created specifically for the current VMX instance

-

use console port 88x6 for the vRE guest VM and 88x7 for the vFPC guest VM, where x = instance number. Any other number which is not yet in use is fine.

-

MAC addresses are allocated following the rule described above

-

for SR-IOV virtualization, these link properties need to be configured: physical NIC name, VF number, MAC address, link speed, MTU

-

for virtio virtualization, only the MAC address can be defined in the config file. The MTU can be defined in a separate file, vmx-junosdev.conf.

|

|

In this example only 4 CPUs were assigned to the vPFE VM, which is fewer than what is required for full performance in a production environment ("performance mode"). In VMX 15.1, the recommended number of CPUs for "performance mode" is calculated as follows:

Non-performance mode, or "lite" mode, requires fewer CPUs: a minimum of 3 CPUs is enough to bring up the VFP. Here in the lab environment, for test/study purposes, I was able to bring up a 2-interface SR-IOV VMX with 5 CPUs in total: 1 for the VCP and 4 for the VFP. |

2.4. run the installation script: SR-IOV

After modification of vmx.conf file, we can run the vmx.sh script to setup

the VMX.

ping@trinity:/virtualization/images/vmx_20151102.0$ sudo ./vmx.sh --install
==================================================
 Welcome to VMX
==================================================
Date..............................................11/24/15 21:18:36
VMX Identifier....................................vmx1
Config file......................................./virtualization/images/vmx_20151102.0/config/vmx.conf
Build Directory.................................../virtualization/images/vmx_20151102.0/build/vmx1
Environment file................................../virtualization/images/vmx_20151102.0/env/ubuntu_sriov.env
Junos Device Type.................................sriov
Initialize scripts................................[OK]
Copy images to build directory....................[OK]
==================================================
 VMX Environment Setup Completed
==================================================
==================================================
 VMX Install & Start
==================================================
Linux distribution................................ubuntu
Intel IOMMU status................................[Enabled]
Verify if GRUB needs reboot.......................[No]
Installation status of qemu-kvm...................[OK]
Installation status of libvirt-bin................[OK]
Installation status of bridge-utils...............[OK]
Installation status of python.....................[OK]
Installation status of libyaml-dev................[OK]
Installation status of python-yaml................[OK]
Installation status of numactl....................[OK]
Installation status of libnuma-dev................[OK]
Installation status of libparted0-dev.............[OK]
Installation status of libpciaccess-dev...........[OK]
Installation status of libyajl-dev................[OK]
Installation status of libxml2-dev................[OK]
Installation status of libglib2.0-dev.............[OK]
Installation status of libnl-dev..................[OK]
Check Kernel version..............................[OK]
Check Qemu version................................[OK]
Check libvirt version.............................[OK]
Check virsh connectivity..........................[OK]
Check IXGBE drivers...............................[OK]
==================================================
 Pre-Install Checks Completed
==================================================
Check for VM vcp-vmx1.............................[Running]
Shutdown vcp-vmx1.................................[OK]
Check for VM vfp-vmx1.............................[Running]
Shutdown vfp-vmx1.................................[OK]
Cleanup VM states.................................[OK]
Check if bridge br-ext exists.....................[Yes]
Get Configured Management Interface...............em1
Find existing management gateway..................br-ext
Mgmt interface needs reconfiguration..............[Yes]
Gateway interface needs change....................[Yes]
Check if br-ext has valid IP address..............[Yes]
Get Management Address............................10.85.4.17
Get Management Mask...............................255.255.255.128
Get Management Gateway............................10.85.4.1
Del em1 from br-ext...............................[OK]
Configure em1.....................................[Yes]
Cleanup VM bridge br-ext..........................[OK]
Cleanup VM bridge br-int-vmx1.....................[OK]
Cleanup IXGBE drivers.............................[OK]
==================================================
 VMX Stop Completed
==================================================
Check VCP image...................................[OK]
Check VFP image...................................[OK]
VMX Model.........................................FPC
Check VCP Config image............................[OK]
Check management interface........................[OK]
Check interface p3p1..............................[OK]
Check interface p2p1..............................[OK]
Setup huge pages to 32768.........................[OK]
Number of Intel 82599 NICs........................8
Configuring Intel 82599 Adapters for SRIOV........[OK]
Number of Virtual Functions created...............[OK]
Attempt to kill libvirt...........................[OK]
Attempt to start libvirt..........................[OK]
Sleep 2 secs......................................[OK]
Check libvirt support for hugepages...............[OK]
==================================================
 System Setup Completed
==================================================
Get Management Address of em1.....................[OK]
Generate libvirt files............................[OK]
Sleep 2 secs......................................[OK]
Configure virtual functions for SRIOV.............[OK]
Find configured management interface..............em1
Find existing management gateway..................em1
Check if em1 is already enslaved to br-ext........[No]
Gateway interface needs change....................[Yes]
Create br-ext.....................................[OK]
Get Management Gateway............................10.85.4.1
Flush em1.........................................[OK]
Start br-ext......................................[OK]
Bind em1 to br-ext................................[OK]
Get Management MAC................................38:ea:a7:37:7c:54
Assign Management MAC 38:ea:a7:37:7c:54...........[OK]
Add default gw 10.85.4.1..........................[OK]
Create br-int-vmx1................................[OK]
Start br-int-vmx1.................................[OK]
Check and start default bridge....................[OK]
Define vcp-vmx1...................................[OK]
Define vfp-vmx1...................................[OK]
Wait 2 secs.......................................[OK]
Start vcp-vmx1....................................[OK]
Start vfp-vmx1....................................[OK]
Wait 2 secs.......................................[OK]
Perform CPU pinning...............................[OK]
==================================================
 VMX Bringup Completed
==================================================
Check if br-ext is created........................[Created]
Check if br-int-vmx1 is created...................[Created]
Check if VM vcp-vmx1 is running...................[Running]
Check if VM vfp-vmx1 is running...................[Running]
Check if tap interface vcp_ext-vmx1 exists........[OK]
Check if tap interface vcp_int-vmx1 exists........[OK]
Check if tap interface vfp_ext-vmx1 exists........[OK]
Check if tap interface vfp_int-vmx1 exists........[OK]
==================================================
 VMX Status Verification Completed.
==================================================
Log file........................................../dev/null
==================================================
 Thankyou for using VMX
==================================================

2.5. quick verification

After the installation we need to know if the installed VMX is working well.

-

list running VMs: vRE and vFPC

ping@trinity:~$ sudo virsh list
[sudo] password for ping:
 Id    Name                           State
----------------------------------------------------
 2     vcp-vmx1                       running
 3     vfp-vmx1                       running

-

login to vRE

ping@trinity:~$ telnet localhost 8816
Trying ::1...
Trying 127.0.0.1...
Connected to localhost.
Escape character is '^]'.

Amnesiac (ttyd0)

login: root
root@% cli
root>

-

verify if virtual PFE is "online"

labroot> show chassis fpc
                     Temp  CPU Utilization (%)   CPU Utilization (%)  Memory    Utilization (%)
Slot State            (C)  Total  Interrupt      1min   5min   15min  DRAM (MB) Heap     Buffer
  0  Online           Testing   3         0        3      3      2      1          6        0

labroot> show chassis fpc pic-status
Slot 0   Online       Virtual FPC
  PIC 0  Online       Virtual

This may take a couple of minutes.

-

login to vPFE

root@trinity:~# telnet localhost 8817
Trying ::1...
Trying 127.0.0.1...
Connected to localhost.
Escape character is '^]'.

Wind River Linux 6.0.0.12 vfp-vmx1 console

vfp-vmx1 login: pfe
Password:
pfe@vfp-vmx1:~$ cat /etc/issue.net
Wind River Linux 6.0.0.12 %h

|

there are two built-in accounts to log in to the vfp yocto VM:

|

Later we'll demonstrate ping and OSPF neighborship tests. A more intensive verification requires enabling more features and protocols (OSPF/BGP/multicast/etc), which is beyond the scope of this doc.
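The first check in this section, confirming that both guest VMs show up as running in `virsh list`, can be automated by parsing the command output. A sketch with hypothetical function names; it relies only on the column layout visible in the capture above (Id, Name, State).

```python
def running_domains(virsh_list_output):
    """Map domain name -> state from the text printed by `virsh list`."""
    domains = {}
    for line in virsh_list_output.splitlines():
        parts = line.split()
        # Data rows start with a numeric Id (or "-" for inactive domains
        # when `virsh list --all` is used); header and separator rows do not.
        if len(parts) >= 3 and (parts[0].isdigit() or parts[0] == "-"):
            domains[parts[1]] = " ".join(parts[2:])
    return domains


def vmx_is_running(virsh_list_output, identifier):
    """True when both vcp-<ID> and vfp-<ID> are reported as running."""
    domains = running_domains(virsh_list_output)
    wanted = ("vcp-" + identifier, "vfp-" + identifier)
    return all(domains.get(name) == "running" for name in wanted)


sample = """\
 Id    Name                           State
----------------------------------------------------
 2     vcp-vmx1                       running
 3     vfp-vmx1                       running
"""
print(vmx_is_running(sample, "vmx1"))
```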

2.6. uninstall (cleanup) VMX with installation script

To uninstall VMX just run the same script with --cleanup option:

ping@trinity:/virtualization/images/vmx_20151102.0$ sudo ./vmx.sh --cleanup

==================================================

Welcome to VMX

==================================================

Date..............................................11/24/15 21:25:41

VMX Identifier....................................vmx1

Config file......................................./virtualization/images/vmx_20151102.0/config/vmx.conf

Build Directory.................................../virtualization/images/vmx_20151102.0/build/vmx1

Environment file................................../virtualization/images/vmx_20151102.0/env/ubuntu_sriov.env

Junos Device Type.................................sriov

Initialize scripts................................[OK]

==================================================

VMX Environment Setup Completed

==================================================

==================================================

VMX Stop & Cleanup

==================================================

Check if vMX is running...........................[Yes]

Check for VM vcp-vmx1.............................[Running]

Shutdown vcp-vmx1.................................[OK]

Check for VM vfp-vmx1.............................[Running]

Shutdown vfp-vmx1.................................[OK]

Cleanup VM states.................................[OK]

Check if bridge br-ext exists.....................[Yes]

Get Configured Management Interface...............em1

Find existing management gateway..................br-ext

Mgmt interface needs reconfiguration..............[Yes]

Gateway interface needs change....................[Yes]

Check if br-ext has valid IP address..............[Yes]

Get Management Address............................10.85.4.17

Get Management Mask...............................255.255.255.128

Get Management Gateway............................10.85.4.1

Del em1 from br-ext...............................[OK]

Configure em1.....................................[Yes]

Cleanup VM bridge br-ext..........................[OK]

Cleanup VM bridge br-int-vmx1.....................[OK]

Cleanup IXGBE drivers.............................[OK]

==================================================

VMX Stop Completed

==================================================

Cleanup auto-generated files......................[OK]

==================================================

VMX Cleanup Completed

==================================================

Log file........................................../dev/null

==================================================

Thankyou for using VMX

==================================================
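The script prints one "step....result" line per action, which makes the output easy to scan programmatically, for instance when a saved log needs to be checked for anything unusual. The sketch below is an assumption-heavy helper: the function names are made up, the set of "expected" bracketed results is simply what appears in the captures in this doc, and the `[Failed]` value in the sample is hypothetical because the source shows no failing run.

```python
import re

# "<step text><two or more dots><result>", e.g. "Shutdown vcp-vmx1......[OK]"
STATUS_RE = re.compile(r"^(?P<step>.+?)\.{2,}(?P<result>\S.*)$")

# Bracketed results seen in the captures in this doc.
EXPECTED = {"OK", "Yes", "No", "Running", "Created", "Enabled"}


def parse_status_lines(log_text):
    """Return (step, result) pairs for every status line in the output."""
    steps = []
    for line in log_text.splitlines():
        match = STATUS_RE.match(line.strip())
        if match:
            steps.append((match.group("step"), match.group("result").strip()))
    return steps


def unexpected_results(log_text, expected=EXPECTED):
    """Return status lines whose bracketed result is not in the expected set."""
    flagged = []
    for step, result in parse_status_lines(log_text):
        if result.startswith("[") and result.endswith("]") and result[1:-1] not in expected:
            flagged.append((step, result))
    return flagged


sample = """\
Check for VM vcp-vmx1.............................[Running]
Shutdown vcp-vmx1.................................[Failed]
Get Management Address............................10.85.4.17
==================================================
 VMX Stop Completed
"""
print(unexpected_results(sample))
```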

If a different vmx config file was specified when installing VMX, pass the same file to the script when cleaning up VMX.

ping@trinity:/virtualization/images/vmx_20151102.0$ sudo ./vmx.sh --install --cfg config/vmx.conf.sriov.1
ping@trinity:/virtualization/images/vmx_20151102.0$ sudo ./vmx.sh --cleanup --cfg config/vmx.conf.sriov.1

3. install VMX using installation script (VIRTIO)

3.1. modify the vmx.conf (virtio)

Most configuration parameters in the vmx.conf config file for a VIRTIO setup of VMX are the same as those used for the SRIOV setup shown above. The differences are:

-

for virtio virtualization, only the MAC address can be defined in this config file

-

MTU can be configured in a separate junosdev.conf config file

-

virtio technology generates separate virtual NICs that are used to build VMX; all L1 properties remain in the physical NIC. Therefore there is no need to specify L1 properties like physical NIC, VF, link speed, etc. for a VIRTIO setup.

-

To communicate with external networks, a virtio virtual NIC can be "bound" to a physical NIC port, or to another virtual NIC, using any existing technology such as linux bridge, OVS, etc.
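As an illustration of the last point, the sketch below generates the iproute2 commands that would put a virtio NIC's host-side interface and a physical port into the same linux bridge. It is a sketch under stated assumptions: the helper `bridge_commands`, the bridge name `br-ge000` and the tap name `ge-0.0.0-vmx1` are hypothetical placeholders (check the real names on the host with `ip link`), and the commands are only built, not executed.

```python
# Linux limits interface names to 15 characters (IFNAMSIZ is 16, including NUL).
MAX_IFNAME_LEN = 15


def bridge_commands(bridge, members):
    """Return iproute2 commands that create `bridge` and enslave `members`."""
    for name in [bridge] + list(members):
        if not 0 < len(name) <= MAX_IFNAME_LEN:
            raise ValueError("invalid interface name: %r" % name)
    cmds = [["ip", "link", "add", "name", bridge, "type", "bridge"]]
    for dev in members:
        cmds.append(["ip", "link", "set", "dev", dev, "master", bridge])
    cmds.append(["ip", "link", "set", "dev", bridge, "up"])
    return cmds


if __name__ == "__main__":
    # Hypothetical names: a tap device backing ge-0/0/0 and physical port eth6.
    for cmd in bridge_commands("br-ge000", ["ge-0.0.0-vmx1", "eth6"]):
        print("sudo " + " ".join(cmd))
```

The same binding can be done with OVS or any other switching layer; a linux bridge is used here only because it needs no extra software.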

ping@trinity:/virtualization/images/vmx_20151102.0/config$ cat vmx.conf.virtio.1
##############################################################
#
#  vmx.conf
#  Config file for vmx on the hypervisor.
#  Uses YAML syntax.
#  Leave a space after ":" to specify the parameter value.
#
##############################################################

---
#Configuration on the host side - management interface, VM images etc.
HOST:
    identifier                : vmx1   # Maximum 4 characters
    host-management-interface : em1
    routing-engine-image      : "/virtualization/images/vmx_20151102.0/images/jinstall64-vmx-15.1F-20151104.0-domestic.img"
    routing-engine-hdd        : "/virtualization/images/vmx_20151102.0/vmx1/vmxhdd.img"
    forwarding-engine-image   : "/virtualization/images/vmx_20151102.0/images/vFPC-20151102.img"

---
#External bridge configuration
BRIDGES:
    - type  : external
      name  : br-ext                  # Max 10 characters

---
#vRE VM parameters
CONTROL_PLANE:
    vcpus       : 1
    memory-mb   : 2048
    console_port: 8816

    interfaces  :
      - type      : static
        ipaddr    : 10.85.4.105
        macaddr   : "02:04:17:01:01:01"
        #macaddr   : "0A:00:DD:C0:DE:0E"
---
#vPFE VM parameters
FORWARDING_PLANE:
    memory-mb   : 4096
    vcpus       : 3
    console_port: 8817
    device-type : virtio

    interfaces  :
      - type      : static
        ipaddr    : 10.85.4.106
        macaddr   : "02:04:17:01:01:02"
        #macaddr   : "0A:00:DD:C0:DE:10"

---
#Interfaces
JUNOS_DEVICES:
   - interface            : ge-0/0/0
     mac-address          : "02:04:17:01:02:01"
     description          : "ge-0/0/0 connects to eth6"

   - interface            : ge-0/0/1
     mac-address          : "02:04:17:01:02:02"
     description          : "ge-0/0/1 connects to eth7"

3.2. run the installation script: virtio

After modifying the vmx config file, we can run the same vmx.sh script to set up the VMX. This time we use another option, --cfg, to tell the script where the configuration file can be found. This is necessary whenever the config file is not the default config/vmx.conf.
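Before running the script, the limits noted in the config file comments (identifier of at most 4 characters, bridge name of at most 10 characters) can be checked up front. The sketch below is not part of vmx.sh. The function `check_vmx_config` is hypothetical; it assumes the YAML documents of the config file have already been loaded and merged into a single dict (for example with PyYAML, not shown), and the check for duplicate MAC addresses is an added assumption, not a documented VMX rule.

```python
import re

MAC_RE = re.compile(r"^[0-9A-Fa-f]{2}(:[0-9A-Fa-f]{2}){5}$")


def check_vmx_config(cfg):
    """Return a list of problems found in a parsed vmx config (empty if none)."""
    problems = []

    ident = cfg["HOST"]["identifier"]
    if len(ident) > 4:  # "Maximum 4 characters" per the config comment
        problems.append("identifier %r is longer than 4 characters" % ident)

    for bridge in cfg.get("BRIDGES", []):
        if len(bridge["name"]) > 10:  # "Max 10 characters" per the config comment
            problems.append("bridge name %r is longer than 10 characters" % bridge["name"])

    # Collect every MAC address defined in the file.
    macs = []
    for plane in ("CONTROL_PLANE", "FORWARDING_PLANE"):
        for intf in cfg.get(plane, {}).get("interfaces", []):
            macs.append(intf["macaddr"])
    for dev in cfg.get("JUNOS_DEVICES", []):
        macs.append(dev["mac-address"])

    for mac in macs:
        if not MAC_RE.match(mac):
            problems.append("malformed MAC address %r" % mac)

    # Assumption: every MAC in the file should be unique.
    lowered = [mac.lower() for mac in macs]
    for mac in sorted(set(lowered)):
        if lowered.count(mac) > 1:
            problems.append("duplicate MAC address %s" % mac)
    return problems


if __name__ == "__main__":
    cfg = {
        "HOST": {"identifier": "vmx1"},
        "BRIDGES": [{"type": "external", "name": "br-ext"}],
        "CONTROL_PLANE": {"interfaces": [{"macaddr": "02:04:17:01:01:01"}]},
        "FORWARDING_PLANE": {"interfaces": [{"macaddr": "02:04:17:01:01:02"}]},
        "JUNOS_DEVICES": [
            {"interface": "ge-0/0/0", "mac-address": "02:04:17:01:02:01"},
            {"interface": "ge-0/0/1", "mac-address": "02:04:17:01:02:02"},
        ],
    }
    print(check_vmx_config(cfg) or "no problems found")
```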

ping@trinity:/virtualization/images/vmx_20151102.0$ sudo ./vmx.sh --install --cfg config/vmx.conf.virtio.1

==================================================

Welcome to VMX

==================================================

Date..............................................11/30/15 21:40:16

VMX Identifier....................................vmx1

Config file.......................................

 /virtualization/images/vmx_20151102.0/config/vmx.conf.virtio.1

Build Directory.................................../virtualization/images/vmx_20151102.0/build/vmx1

Environment file................................../virtualization/images/vmx_20151102.0/env/ubuntu_virtio.env

Junos Device Type.................................virtio

Initialize scripts................................[OK]

Copy images to build directory....................[OK]

==================================================

VMX Environment Setup Completed

==================================================

==================================================

VMX Install & Start

==================================================

Linux distribution................................ubuntu

Check GRUB........................................[Disabled]

Installation status of qemu-kvm...................[OK]

Installation status of libvirt-bin................[OK]

Installation status of bridge-utils...............[OK]

Installation status of python.....................[OK]

Installation status of libyaml-dev................[OK]

Installation status of python-yaml................[OK]

Installation status of numactl....................[OK]

Installation status of libnuma-dev................[OK]

Installation status of libparted0-dev.............[OK]

Installation status of libpciaccess-dev...........[OK]

Installation status of libyajl-dev................[OK]

Installation status of libxml2-dev................[OK]

Installation status of libglib2.0-dev.............[OK]

Installation status of libnl-dev..................[OK]

Check Kernel Version..............................[Disabled]

Check Qemu Version................................[Disabled]

Check libvirt Version.............................[Disabled]

Check virsh connectivity..........................[OK]

IXGBE Enabled.....................................[Disabled]

==================================================

Pre-Install Checks Completed

==================================================

Check for VM vcp-vmx1.............................[Not Running]

Check for VM vfp-vmx1.............................[Not Running]

Cleanup VM states.................................[OK]

Check if bridge br-ext exists.....................[No]

Cleanup VM bridge br-ext..........................[OK]

Cleanup VM bridge br-int-vmx1.....................[OK]

==================================================

VMX Stop Completed

==================================================

Check VCP image...................................[OK]

Check VFP image...................................[OK]

VMX Model.........................................FPC

Check VCP Config image............................[OK]

Check management interface........................[OK]

Setup huge pages to 32768.........................[OK]

Attempt to kill libvirt...........................[OK]

Attempt to start libvirt..........................[OK]

Sleep 2 secs......................................[OK]

Check libvirt support for hugepages...............[OK]

==================================================

System Setup Completed

==================================================

Get Management Address of em1.....................[OK]

Generate libvirt files............................[OK]

Sleep 2 secs......................................[OK]

Find configured management interface..............em1

Find existing management gateway..................em1

Check if em1 is already enslaved to br-ext........[No]

Gateway interface needs change....................[Yes]

Create br-ext.....................................[OK]

Get Management Gateway............................10.85.4.1

Flush em1.........................................[OK]

Start br-ext......................................[OK]

Bind em1 to br-ext................................[OK]

Get Management MAC................................38:ea:a7:37:7c:54

Assign Management MAC 38:ea:a7:37:7c:54...........[OK]

Add default gw 10.85.4.1..........................[OK]

Create br-int-vmx1................................[OK]

Start br-int-vmx1.................................[OK]

Check and start default bridge....................[OK]